5 signs AI completed your survey, not a human

Survey fraud isn’t new. What’s new is that, increasingly, fraudulent survey responses look good.

Bots are no longer clicking randomly; they’re generating plausible human answers at scale.

If you’re running surveys today, especially with open-ended questions, here are five warning signs that your respondents may not be human at all.

1. Open-ended responses are fluent but empty

The answers look great at first glance.

They’re articulate. They use proper grammar. They stay neatly on topic.

And yet, there’s nothing there.

No personal details. No friction. No lived experience. No mess.

AI is excellent at producing generalized language that sounds thoughtful. It’s terrible at saying something specific.

Humans ramble. They overshare. They get oddly precise about irrelevant details.

If your open-ends feel like executive summaries of feelings rather than actual feelings, that’s your first red flag.

2. Tone and structure are strangely consistent

Look across responses instead of reading them individually.

Do they:

- Use similar sentence lengths?

- Follow the same logical structure?

- Sound like slightly edited versions of the same voice?

That’s not consensus, that’s generation.

Real humans vary wildly in how they write, even when they agree with each other. Some respond with bullet points, some with fragments, and some with a single angry sentence typed on a phone.

AI, on the other hand, is very good at being consistently reasonable.
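One way to put a number on "strangely consistent" is to compare average sentence length across respondents. The sketch below is a minimal illustration, not a production detector. It assumes sentences can be split naively on `.`, `!` and `?`, and the `uniformity_score` metric (1 / (1 + coefficient of variation)) is a hypothetical choice made for this example.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Word count of each sentence, splitting naively on . ! and ?"""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def uniformity_score(responses: list[str]) -> float:
    """Hypothetical metric: 1 / (1 + CV) of per-response mean sentence length.

    Close to 1.0 when every respondent writes sentences of about the same
    length; lower when lengths vary the way human writing usually does.
    """
    means = []
    for response in responses:
        lengths = sentence_lengths(response)
        if lengths:  # skip blank answers
            means.append(statistics.mean(lengths))
    if len(means) < 2:
        return 0.0  # not enough responses to measure spread
    cv = statistics.stdev(means) / statistics.mean(means)
    return 1 / (1 + cv)


uniform = [
    "The feature is useful. It saves time.",
    "The design is clean. It feels modern.",
    "The price is fair. It offers value.",
]
varied = [
    "ugh. too slow",
    "I used it for three weeks on my old laptop and it crashed twice during exports, which is annoying.",
    "fine",
]
print(uniformity_score(uniform))           # 1.0
print(round(uniformity_score(varied), 2))  # 0.41
```

A score near 1.0 says everyone writes with the same rhythm. Treat it as one signal to layer with others, never as a verdict on its own.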

3. Answers perfectly track the question wording

AI responses tend to mirror the prompts that produced them.

You’ll see keywords reused verbatim, questions restated as answers, and polite compliance without deviation.

For example: “I agree that the feature is valuable because it improves efficiency and usability.”

That’s not how people talk. That’s how systems respond.

Humans paraphrase. They misunderstand. They challenge the premise. They answer the wrong question with confidence.

AI answers the exact question you asked, and nothing more.

Perfect alignment is not authenticity.
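Prompt mirroring can be approximated by measuring how much of an answer’s vocabulary is lifted straight from the question. This sketch assumes plain word matching with a tiny hand-picked stop-word list; `STOP_WORDS` and `mirroring_score` are hypothetical names, and because there is no stemming, "improve" and "improves" count as different words.

```python
import re

# Hypothetical, deliberately tiny stop-word list for the example.
STOP_WORDS = {
    "a", "an", "and", "because", "do", "how", "i", "in", "is",
    "it", "of", "that", "the", "to", "what", "you",
}


def content_words(text: str) -> set[str]:
    """Lowercase words in the text, minus stop words."""
    return {w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOP_WORDS}


def mirroring_score(question: str, answer: str) -> float:
    """Share of the answer's content words that appear verbatim in the question."""
    answer_words = content_words(answer)
    if not answer_words:
        return 0.0
    return len(answer_words & content_words(question)) / len(answer_words)


question = "How valuable is the feature, and does it improve efficiency and usability?"
ai_like = "I agree that the feature is valuable because it improves efficiency and usability."
human_like = "Honestly I mostly use it to export reports, and the export button keeps moving around."

print(round(mirroring_score(question, ai_like), 2))  # 0.67
print(mirroring_score(question, human_like))         # 0.0
```

The AI-style answer reuses four of its six content words from the question; the human answer reuses none.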

4. Speed is inhuman and suspiciously consistent

Fast completes alone are nothing new. What’s new is fast completes paired with polished open-ends and almost no variance in completion time.

AI doesn’t pause to think. AI doesn’t reread the question. AI doesn’t get distracted by Slack.

You may see:

- Very short completion times

- Tight clustering around the same duration

- Fluent open-ends produced at scale

Any one of these can happen with humans. All of them together should make you uncomfortable.
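The point here is the combination: speed alone, wordiness alone, or a tight timing spread alone can all be innocent. Below is a minimal sketch of checking them together across a batch of completes. Every threshold (`speed_cutoff_s=180`, `min_words=40`, `max_cv=0.15`, `min_share=0.2`) is an illustrative assumption that would need calibrating against each survey’s length, and the function names are hypothetical.

```python
import statistics


def fast_and_fluent(durations_s: list[float], open_end_words: list[int],
                    speed_cutoff_s: float = 180, min_words: int = 40) -> list[int]:
    """Indexes of completes that are both very fast and unusually wordy."""
    return [
        i
        for i, (seconds, words) in enumerate(zip(durations_s, open_end_words))
        if seconds < speed_cutoff_s and words >= min_words
    ]


def batch_is_suspicious(durations_s: list[float], open_end_words: list[int],
                        speed_cutoff_s: float = 180, min_words: int = 40,
                        max_cv: float = 0.15, min_share: float = 0.2) -> bool:
    """True when enough completes are fast AND fluent AND tightly clustered in time."""
    flagged = fast_and_fluent(durations_s, open_end_words, speed_cutoff_s, min_words)
    if len(flagged) < 2:
        return False  # need at least two durations to measure spread
    share = len(flagged) / len(durations_s)
    times = [durations_s[i] for i in flagged]
    cv = statistics.stdev(times) / statistics.mean(times)  # coefficient of variation
    return share >= min_share and cv <= max_cv


# Completion time in seconds and total open-end word count per respondent.
durations = [150, 152, 149, 151, 600, 420, 900, 150]
words = [60, 55, 62, 58, 12, 45, 8, 61]
print(fast_and_fluent(durations, words))      # [0, 1, 2, 3, 7]
print(batch_is_suspicious(durations, words))  # True
```

Five of eight completes finish in about two and a half minutes with long open-ends, and their times differ by only a few seconds, so the batch is flagged for a closer look.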

5. Responses avoid strong emotion or contradiction

AI defaults to balance, with measured tone and careful phrasing.

Real people are not balanced.

They exaggerate. They contradict themselves. They express irritation, enthusiasm, confusion, and certainty – sometimes all at once.

Emotion is noise. And noise is human.

When every response feels calm, fair, and emotionally regulated, you’re not looking at insight. You’re looking at smoothing.

The real risk of AI responses

AI doesn’t just pollute data. It smooths it, and smoothed data:

- Inflates agreement

- Reduces variance

- Dampens edge cases

- Pushes teams toward safe, consensus-driven decisions

You don’t notice the distortion immediately.

You notice it later – when the product launch underperforms, when messaging falls flat, when “everyone liked it” turns out to mean “no one cared.”

What teams should do now

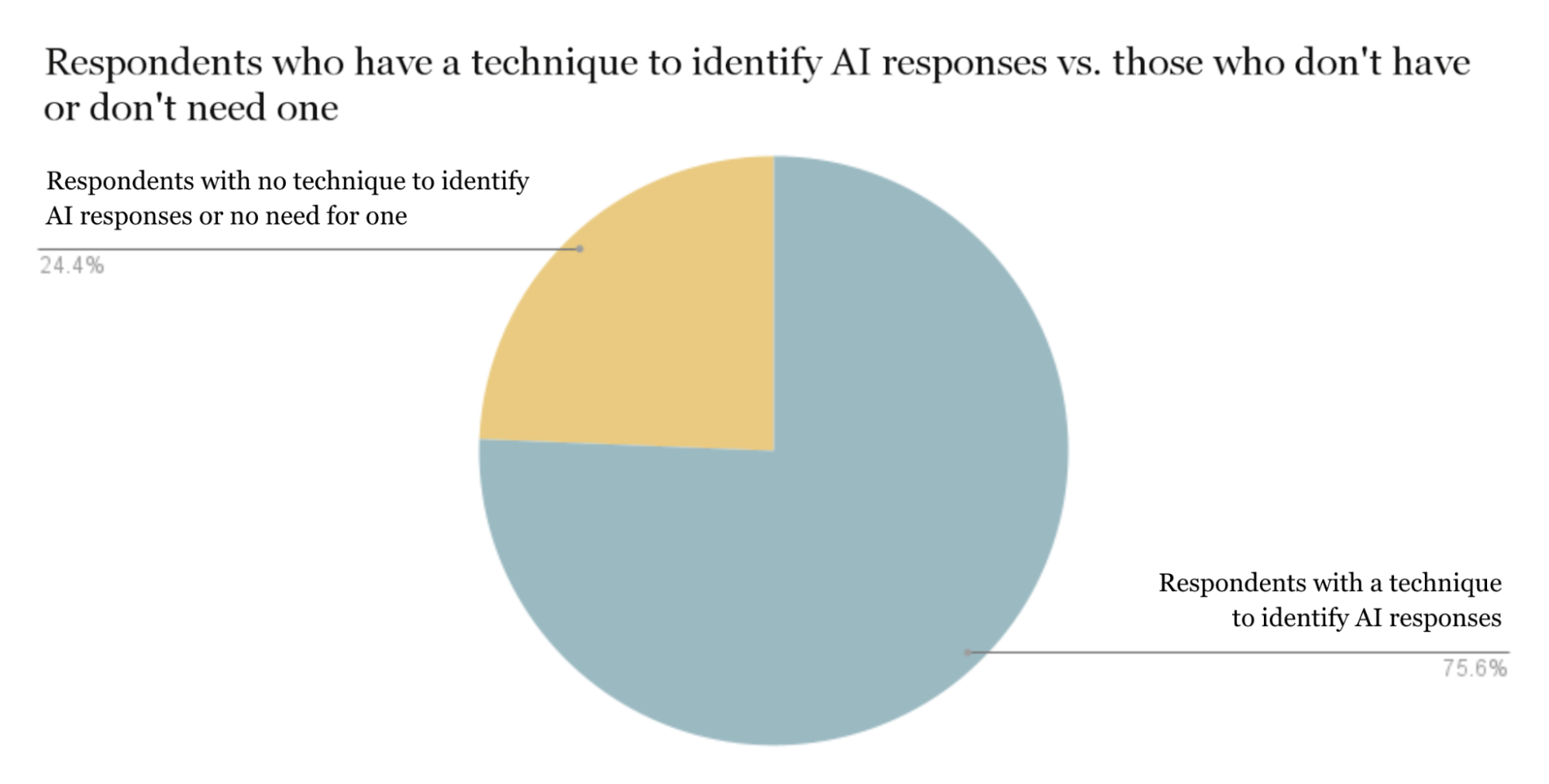

According to a recent Checkbox survey, AI is making it harder to get quality data. However, 75% of researchers are using at least one technique to identify AI responses.

If you’re running surveys in 2026:

- Treat open-ends as verification signals, not flavor text

- Layer behavioral, linguistic, and timing checks

- Design questions that reward lived experience, not abstraction

- Combine sample controls with study-design defenses

- Never rely on a single detection method

AI detection is not a tool. It’s a system, and systems need redundancy.

Use AI to generate risk scores, not binary labels:

- Linguistic uniformity score

- Prompt mirroring score

- Timing consistency score

- Experience depth score

Then:

- Flag clusters

- Remove tails

- Review samples manually

AI narrows the haystack. Humans make the final call.
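The risk-score workflow above can be sketched roughly as follows. This assumes each of the four scores has already been produced upstream (by a model or a heuristic) and scaled from 0 to 1; the `RiskScores` fields, the equal `WEIGHTS`, and `review_queue` are hypothetical. Experience depth is inverted because more lived detail means lower risk, and the output is a ranked list for a human reviewer, not a fraud label.

```python
from dataclasses import dataclass


@dataclass
class RiskScores:
    """Hypothetical per-respondent scores, each assumed to be scaled 0 to 1."""
    linguistic_uniformity: float  # higher = more uniform with other respondents
    prompt_mirroring: float       # higher = more wording copied from the question
    timing_consistency: float     # higher = timing closer to a suspicious cluster
    experience_depth: float       # higher = MORE lived detail, i.e. lower risk


# Illustrative equal weights; real weights would need validation.
WEIGHTS = {"uniformity": 0.25, "mirroring": 0.25, "timing": 0.25, "shallowness": 0.25}


def composite_risk(s: RiskScores) -> float:
    """Weighted average of the four signals, with experience depth inverted."""
    components = {
        "uniformity": s.linguistic_uniformity,
        "mirroring": s.prompt_mirroring,
        "timing": s.timing_consistency,
        "shallowness": 1.0 - s.experience_depth,
    }
    return sum(WEIGHTS[name] * value for name, value in components.items())


def review_queue(scores: dict[str, RiskScores], top_share: float = 0.1) -> list[str]:
    """IDs of the highest-risk tail, ranked, for a human to review (not auto-remove)."""
    ranked = sorted(scores, key=lambda rid: composite_risk(scores[rid]), reverse=True)
    n = max(1, round(len(ranked) * top_share))
    return ranked[:n]


sample = {
    "r1": RiskScores(0.9, 0.8, 0.9, 0.1),
    "r2": RiskScores(0.2, 0.1, 0.3, 0.8),
    "r3": RiskScores(0.5, 0.6, 0.4, 0.5),
    "r4": RiskScores(0.1, 0.2, 0.2, 0.9),
    "r5": RiskScores(0.8, 0.7, 0.6, 0.3),
}
print(review_queue(sample, top_share=0.4))  # ['r1', 'r5']
```

Ranking instead of thresholding keeps the machine in its lane: it decides who gets looked at first, and a person decides who gets removed.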

But can’t fraudsters simply build better AI that evades AI-based detection?

The answer’s yes, but only up to a point. Think of it as an arms race between cost and believability.

In theory, someone could build AI that:

- Injects realistic typos

- Varies tone and structure

- Simulates fatigue

- Introduces contradictions

- Maintains narrative continuity across long surveys

In practice, doing this at scale is expensive and slow. Survey fraud only pays when it’s fast, cheap, and repeatable. Is there enough money to be made to justify building such complex models? Potentially, but probably not in the next couple of years.

Even if individual responses pass, clusters give them away.

At scale, AI tends to:

- Converge on similar phrasing patterns

- Avoid linguistic extremes

- Cluster around moderate sentiment

- Compress variance

Humans don’t.

Detection doesn’t ask: “Is this response AI?” It asks: “Why do 18% of respondents sound like cousins?”
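A minimal way to ask the "cousins" question is to compare every pair of open-ends and count how many respondents have at least one near-twin. This sketch uses Jaccard similarity on lowercase word sets; the 0.5 `threshold` and the `cousin_share` name are assumptions for illustration, not a validated cutoff.

```python
import re
from itertools import combinations


def word_set(text: str) -> set[str]:
    """Lowercase words in a response."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Shared words divided by total distinct words across both sets."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cousin_share(responses: list[str], threshold: float = 0.5) -> float:
    """Fraction of respondents whose open-end has at least one near-twin."""
    sets = [word_set(r) for r in responses]
    has_cousin = [False] * len(responses)
    # Compare every pair once; cost grows quadratically with sample size.
    for i, j in combinations(range(len(responses)), 2):
        if jaccard(sets[i], sets[j]) >= threshold:
            has_cousin[i] = has_cousin[j] = True
    return sum(has_cousin) / len(responses) if responses else 0.0


responses = [
    "The app is intuitive and saves me time every day.",
    "The app is intuitive and it saves me time each day.",
    "Honestly the sync bug ate my notes twice last month.",
    "I only open it when my boss asks for the weekly report.",
]
print(cousin_share(responses))  # 0.5
```

The first two answers share 9 of 12 distinct words, so half of this tiny sample "sounds like cousins." Because the pairwise loop is quadratic, large studies would more likely use an approximate method such as MinHash with locality-sensitive hashing.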

Furthermore, the arms race is asymmetric. Fraud has to work perfectly every time. Detection only has to work often enough, and every added layer of realism helps detection more than fraud.

So, AI respondents will improve.

But so will:

- Behavioral gating

- Adaptive surveys

- Real-time inconsistency checks

- Device and session fingerprinting

- Study-specific defenses

The future isn’t undetectable AI respondents. It’s fraud becoming too expensive to bother with.

Of course, there’s also something to be said for good panel management. Good panels track panelists’ behavior over time and can spot suspicious patterns. They also engage with panelists directly, which surfaces red flags of its own. Think of security officers at airport gates asking travelers where they’re going, where they’ve been, and what they plan to do on their trip. That small talk often produces tells.

Lastly, checking panelist IDs at the time of registration can head off a lot of problems. At Ola Surveys, we believe this is fundamentally important.

But even people who are who they say they are can ruin a survey with AI-generated responses. It’s time to get savvy about catching those responses, removing them from your data, and excluding those respondents from future surveys.

Cam Wall is a senior executive with 20 years of experience leading research operations, data science teams, product innovation, and enterprise strategic initiatives in market research. He spent six years on the executive leadership team of a $75M insights organization and currently serves as the founder and president of Ola Surveys, where he seeks to improve the quality of survey data through better incentives and enhanced vetting of research participants.
