The ultimate guide to CSAT questions, customer satisfaction surveys, and how to improve your score

Understanding customers is fundamental to business success and sustained growth. To make effective business decisions, you need solid insights into what your customers think and feel about your brand, products, services, and support.

Customer satisfaction surveys, particularly those focused on Customer Satisfaction Score (CSAT), provide a direct line to your audience, enabling you to collect customer feedback that is both honest and actionable.

With the right questions, you can transform simple survey responses into a powerful tool for strategic improvement. Done well, customer satisfaction surveys aren't just a reporting exercise; they also help you:

- Measure satisfaction consistently across the customer journey

- Catch problems early, before they lead to customer churn

- Understand customer sentiment in context, not just as a number

- Turn survey responses into a feedback loop your teams can actually use

In this guide, you'll learn how to measure customer satisfaction using the right survey methods, how to design good customer satisfaction surveys (without annoying or frustrating people), and how to use the insights to improve customer satisfaction over time.

We'll also share a practical customer satisfaction survey template, targeted survey questions, and a clear way to define what a good customer satisfaction score looks like for your business.

What is CSAT?

Customer satisfaction score (CSAT) is a metric that shows how satisfied customers are with a specific interaction, product, service, or overall experience.

Most teams measure CSAT with a short customer satisfaction survey that asks customers to rate their overall satisfaction on a scale such as 1–5, 1–7, or 0–10. They then calculate their CSAT score as the percentage of satisfied customers (often the top two responses, like 4–5 on a 5-point scale) out of total survey responses.

CSAT is popular because it's fast, easy to explain and understand, and flexible enough to use at different moments in the customer journey.

How to measure customer satisfaction

There are plenty of ways to measure satisfaction, but surveys are still the most direct route to understanding customer sentiment. The trick is choosing the right survey method for the question you're trying to answer.

Below are the most common types of customer satisfaction survey methods (and how to use each without collecting noisy data).

CSAT survey

A classic CSAT survey focuses on immediate satisfaction after a defined interaction.

What it measures

- Overall satisfaction with a recent customer service experience, purchase, onboarding step, or feature launch

How it works

- Ask a single "rate your overall satisfaction" question on a 1–5 or 1–7 scale

- Follow up with one open-text question to gather detailed feedback

How to calculate it

- CSAT (%) = (number of satisfied customers ÷ total responses) × 100
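If you want to compute this yourself, here's a minimal Python sketch. It assumes ratings arrive as a list of integers where the highest number is the best answer, and that "satisfied" means the top two boxes (4–5 on a 5-point scale). The function name and the `top_boxes` parameter are illustrative, not taken from any survey tool.

```python
def csat_percent(ratings: list[int], scale_max: int = 5, top_boxes: int = 2) -> float:
    """Return CSAT as the percentage of responses in the top `top_boxes` of the scale.

    Assumes integer ratings where `scale_max` is the best possible answer.
    """
    if not ratings:
        raise ValueError("CSAT is undefined with zero responses")
    threshold = scale_max - top_boxes + 1  # e.g. 5 - 2 + 1 = 4 on a 5-point scale
    satisfied = sum(1 for r in ratings if r >= threshold)
    return 100 * satisfied / len(ratings)


responses = [5, 4, 3, 5, 2, 4, 5, 1, 4, 3]
print(csat_percent(responses))               # top-two-box: 6 of 10 -> 60.0
print(csat_percent(responses, top_boxes=1))  # top box only: 3 of 10 -> 30.0
```

Switching `top_boxes` from 2 to 1 halves the score for this sample, which is why the definition of "satisfied" has to stay fixed if you want comparable numbers over time.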

What it's best for

- Transactional moments where you want real-time customer feedback, such as after a support ticket closes or a customer service representative finishes a call

Use our customer service survey template to start gathering data and understanding where you can improve customer interactions.

Customer Effort Score

Customer effort score (CES) measures how much effort a customer had to put in to get what they needed.

What it measures

- Friction in your customer experience

- Whether customer support processes feel easy or exhausting

How it works

- Ask a "how much effort" question, such as: "How easy was it to resolve your issue today?"

- Use a 1–7 agreement scale or a 0–10 scale

What it's best for

- Customer support and self-serve flows

- Onboarding, account setup, billing changes, and other tasks where "easy" is the win

Why it's useful

- Effort is often a leading indicator for customer retention – customers can be "satisfied" and still leave if everything feels like work

Voice of the customer surveys

Voice of the customer (VOC) surveys are among the most common ways to gather valuable customer feedback. They usually combine CSAT, CES, and NPS questions into one workflow.

What it measures

- The "voice" of the customer – i.e., their comprehensive opinion of everything to do with your company

How it works

- Use voice of the customer software to develop surveys consisting of all the questions you need to understand and benchmark your users' opinions at given points in their lifecycle

What it's best for

- Building a comprehensive view of your customers as part of a broader VOC program

Why it's useful

- With so many ways to gather customer feedback, having an overarching strategy to analyze all the data as a whole is vital – VOC surveys help you do that.

Net Promoter Score

Net Promoter Score (NPS) is the "would you recommend us?" metric tied closely to customer loyalty.

What it measures

- Likelihood to recommend (a proxy for customer loyalty)

- Relationship-level sentiment, not just one moment

How it works

- "How likely are you to recommend [company/product] to a friend or colleague?" on a 0–10 scale

- Group respondents into detractors (0–6), passives (7–8), and promoters (9–10)

- NPS = % promoters − % detractors
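Here's the same arithmetic as a short Python sketch, assuming answers are integers from 0 to 10. Passives count toward the total but don't move the score, so NPS ranges from -100 (all detractors) to +100 (all promoters). The function name is illustrative.

```python
def nps(scores: list[int]) -> float:
    """Return Net Promoter Score (-100 to 100) from 0-10 'likelihood to recommend' answers."""
    if not scores:
        raise ValueError("NPS is undefined with zero responses")
    if any(s < 0 or s > 10 for s in scores):
        raise ValueError("NPS answers must be on a 0-10 scale")
    promoters = sum(1 for s in scores if s >= 9)   # 9-10
    detractors = sum(1 for s in scores if s <= 6)  # 0-6
    # Passives (7-8) are included in the denominator but neither add nor subtract.
    return 100 * (promoters - detractors) / len(scores)


answers = [10, 9, 9, 8, 7, 6, 10, 3, 9, 5]
print(nps(answers))  # 5 promoters, 3 detractors, 10 responses -> 20.0
```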

What it's best for

- Relationship surveys (quarterly or biannual)

- Tracking customer loyalty over time, especially in subscription models

A quick tip: Pair NPS with one "Why did you choose that score?" question. It's the difference between a number and actionable insights.

Multi-question customer experience surveys

Sometimes one score isn't enough. Customer experience surveys combine a few targeted questions to diagnose what drives satisfaction.

What it measures

- Service quality, product quality, speed, clarity, and resolution

- Whether customers feel their expectations were met

How it works

- 3–8 questions max (keep it tight)

- Mix rating questions, multiple choice questions, and a single open-ended prompt

What it's best for

- After onboarding milestones

- After major product or service updates

- When leadership needs a more complete view of the overall customer satisfaction drivers

A quick tip: You can still include a CSAT question at the top of your customer experience research survey, then use follow-up questions to explain it.

Customer feedback surveys for product and feature decisions

Product-focused customer feedback surveys help you evaluate customer preferences and uncover pain points that may not show up in support tickets.

What it measures

- Feature satisfaction

- Gaps in product or service delivery

- The motivations behind customer behavior (why they do or don't use something)

How it works

- Ask about specific workflows, not vague opinions

- Use a mix of quantitative data (ratings) and qualitative data (open responses)

What it's best for

- Post-launch feedback (1–2 weeks after release)

- Roadmap planning

- Mapping user journeys and prioritizing improvements based on real usage

Lifecycle pulse surveys

Lifecycle surveys measure satisfaction at each stage of the customer journey, from brand-new customers through to long-standing ones.

What it measures

- How customers feel at key milestones

- Risks for customer churn and opportunities to build customer loyalty

How it works

- Send short targeted surveys at set points: onboarding complete, 30 days in, renewal window, etc.

- Compare customer segments (role, plan, region, product line)

What it's best for

- Subscription products

- High-touch services

- Any business where retention is a core growth lever

Bain argues that a 5% increase in retention can significantly increase profits in many business models, which is why measuring satisfaction in a way you can act on is worth the effort.

Always-on micro-surveys

Micro-surveys are lightweight prompts embedded in your product or site, designed to collect feedback in the moment.

What it measures

- Real-time customer feedback on a specific page, feature, or task

- Immediate customer sentiment shifts after changes

How it works

- 1 question, optional comment

- Triggered based on events (e.g., "user completed workflow," "user abandoned checkout," "ticket closed")
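Here's a minimal Python sketch of event-based triggering. The event names, questions, and 30-day cooldown are hypothetical; the cooldown is one simple way to keep always-on prompts from turning into survey fatigue, not a rule from any particular platform.

```python
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical event names, each mapped to one micro-survey question.
TRIGGERS = {
    "workflow_completed": "How easy was it to complete this task?",
    "checkout_abandoned": "What stopped you from completing your purchase?",
    "ticket_closed": "How satisfied are you with the support you received?",
}

# Assumed rule: survey the same user at most once every 30 days to limit fatigue.
COOLDOWN = timedelta(days=30)


def micro_survey_for(event: str, last_surveyed: Optional[datetime], now: datetime) -> Optional[str]:
    """Return the question to show after `event`, or None if no survey should fire."""
    question = TRIGGERS.get(event)
    if question is None:
        return None  # this event isn't wired to a survey
    if last_surveyed is not None and now - last_surveyed < COOLDOWN:
        return None  # surveyed too recently
    return question


now = datetime(2024, 6, 1, 12, 0)
print(micro_survey_for("ticket_closed", None, now))                   # the support question
print(micro_survey_for("ticket_closed", datetime(2024, 5, 25), now))  # None: surveyed under 30 days ago
```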

What it's best for

- High-traffic digital products

- Continuous improvement programs where identifying behavioral trends matters

How to use customer satisfaction surveys effectively

Implementing customer satisfaction surveys is less about sending more surveys and more about sending the right one at the right time.

Here's how to make customer feedback surveys genuinely useful and easier for customers to complete.

Start with the moment you're measuring

Before you write survey questions, decide which "type of customer satisfaction" you're trying to understand:

- Transactional satisfaction – "How was that interaction?"

- Product satisfaction – "How is the product or service performing in real life?"

- Relationship satisfaction – "How do you feel about us overall?"

- Support satisfaction – "How was your recent experience with our customer support team?"

Each type maps to different moments in the customer journey and calls for a different survey method (CSAT, CES, NPS, or a multi-question CX survey).

Place surveys at meaningful points in the customer journey

Good customer satisfaction surveys align with real decision points:

- After onboarding milestones (activation, first value moment)

- After support interactions (ticket resolved, live chat ended)

- After a major product launch (once customers have time to use it)

- Before renewal (to understand retention risk early)

- After renewals or upgrades (to validate value delivered)

When you plan touchpoints like this, you're not just collecting data; you're designing a customer journey where feedback is part of the experience.

Keep surveys short, mobile-friendly, and focused

Most customers will answer on mobile devices, between other tasks.

A few practical rules:

- One primary question per survey (CSAT, CES, or NPS)

- One optional open-text follow-up to gather authentic feedback

- Avoid double-barreled questions (two topics in one)

- Use clear labels (what does a "4" mean?)

- Focus on what you can actually change

Make sure you use a survey provider with mobile-friendly surveys, like Checkbox. Request a demo of Checkbox today to see how it could work for you.

Use logic flows and randomization to reduce bias and fatigue

If you want more honest, detailed feedback, the experience of taking the survey matters.

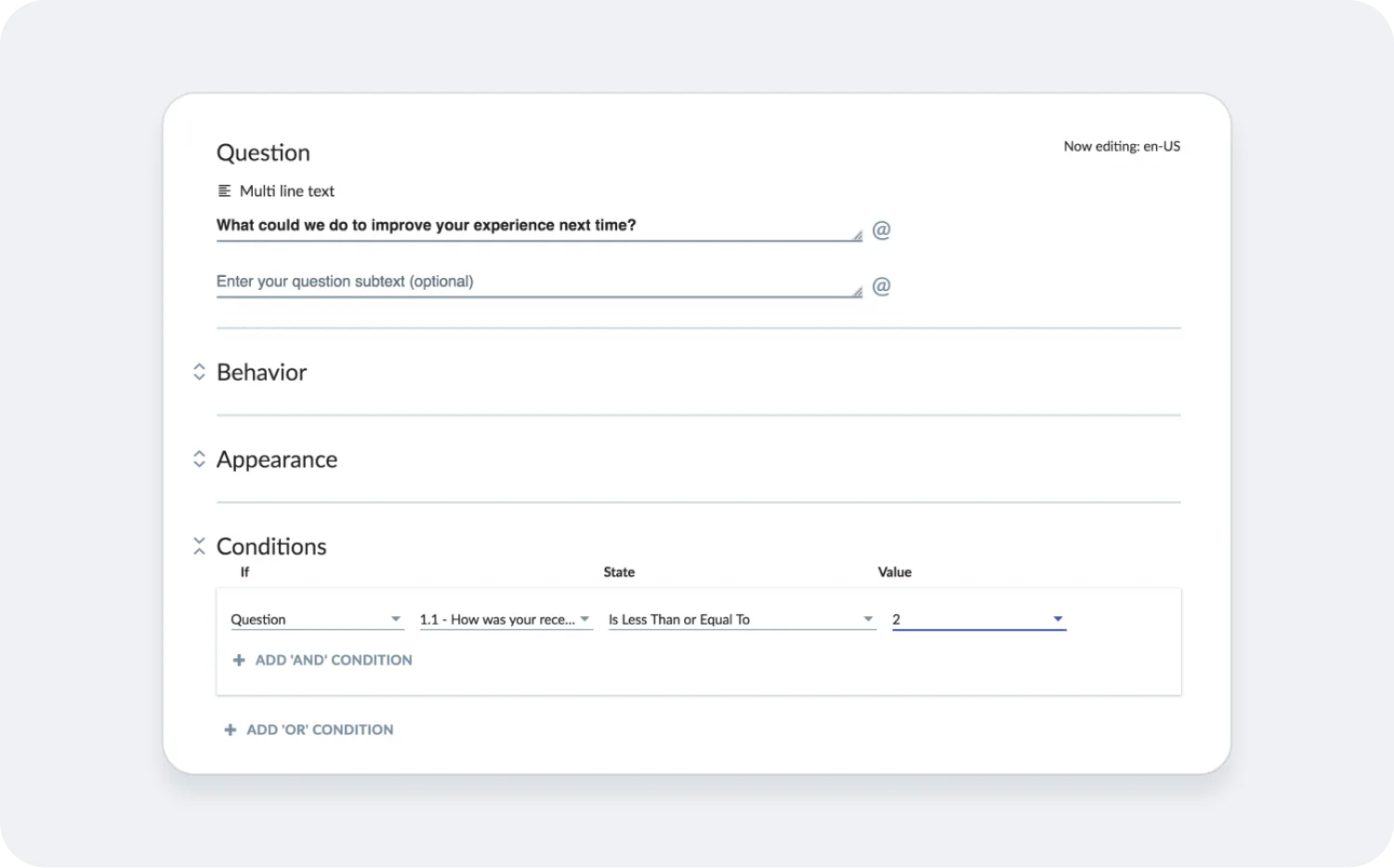

Two survey features make a noticeable difference: survey logic and branching, and question randomization.

Survey logic and branching features enable you to show only relevant questions based on earlier answers, so customers don't waste time on things that don't apply. Checkbox supports survey logic using conditions and branching, which helps you tailor the flow for different customers and scenarios.
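To make conditional branching concrete, here's a minimal Python sketch. It isn't how Checkbox or any other platform implements survey logic; the question IDs, wording, and conditions are hypothetical. Each question carries a condition on the answers collected so far, and only questions whose condition is true are shown.

```python
from typing import Callable, Optional

# Hypothetical question IDs, wording, and display conditions, for illustration only.
# Each condition receives the answers collected so far and returns True to show the question.
QUESTIONS: list[tuple[str, str, Callable[[dict], bool]]] = [
    ("csat", "Overall, how satisfied are you with your experience today?", lambda a: True),
    ("resolved", "Did we resolve your issue today?", lambda a: True),
    # Shown only when the customer said the issue wasn't resolved.
    ("unresolved", "What is still unresolved?", lambda a: a.get("resolved") == "No"),
    # Shown only for low ratings (1 or 2 on a 1-5 scale).
    ("reason", "What was the main reason for your rating?", lambda a: a.get("csat", 5) <= 2),
]


def next_question(answers: dict) -> Optional[tuple[str, str]]:
    """Return (id, text) for the next question to show, or None when the survey is finished."""
    for qid, text, show_if in QUESTIONS:
        if qid in answers:
            continue  # already answered
        if show_if(answers):
            return qid, text
    return None


# Walk through one respondent who rates 2/5 and says the issue wasn't resolved.
answers: dict = {}
for reply in (2, "No", "Still waiting on a refund", "Speed of service"):
    step = next_question(answers)
    if step is None:
        break
    qid, text = step
    print(text)
    answers[qid] = reply
```

A respondent who rates 4 and says the issue was resolved would see only the first two questions.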

Randomization lets you rotate answer choices or question order (when appropriate) to reduce order bias and improve response quality, especially in longer customer experience surveys. Many survey platforms, like Checkbox, support question types and setups designed for structured, unbiased measurement.

The result is a survey that feels shorter than it is, and cleaner survey data.
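For answer-choice randomization, a common pattern is to shuffle the substantive options while keeping catch-all choices like "Other" at the end, where respondents expect them. This Python sketch assumes that pattern; the `pinned_last` and `seed` parameters are illustrative, with the seed standing in for a per-respondent value so each person sees a stable order.

```python
import random
from typing import Optional


def randomized_choices(choices: list[str], pinned_last: tuple[str, ...] = ("Other",),
                       seed: Optional[int] = None) -> list[str]:
    """Shuffle answer choices to reduce order bias, keeping catch-all options at the end."""
    rng = random.Random(seed)  # same seed -> same order; None -> a fresh random order
    movable = [c for c in choices if c not in pinned_last]
    fixed = [c for c in choices if c in pinned_last]
    rng.shuffle(movable)
    return movable + fixed


reasons = ["Speed of service", "Clarity of communication", "Product quality",
           "Issue resolution", "Other"]
print(randomized_choices(reasons, seed=42))
```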

Use a simple customer satisfaction survey template

If you need a quick starting point, here's a customer satisfaction survey template you can adapt for most teams:

- CSAT question

"Overall, how satisfied are you with your experience today?" (1–5 scale)

- Driver question (choose one based on the context)

- Support: "How satisfied are you with the help from our customer service team?"

- Product: "How satisfied are you with the product or service you used today?"

- Effort: "How easy was it to complete your task?"

- Multiple choice questions (optional)

"What was the main reason for your rating?"

- Speed of service

- Clarity of communication

- Product quality

- Issue resolution

- Other [with text box]

- Open-ended question

"What's one thing we could do to improve?"

If you want a ready-made version, Checkbox offers a Customer Satisfaction (CSAT) Survey Template you can customize for your own workflows.

Close the loop with customers and teams

Surveys work best when customers can see that feedback leads somewhere.

A strong feedback loop includes:

- Acknowledgment – "Thanks, we heard you."

- Action – changes based on patterns, not one-off comments

- Follow-up – reaching back out when you fix a known issue

- Internal routing – ensuring the right team sees the right feedback (product, support, onboarding, leadership)

That's how you move from collecting feedback to building loyal customers.

17 great customer satisfaction survey questions

Below are 17 customer satisfaction survey questions you can use across support, product, onboarding, and relationship check-ins. Each open-ended, closed-ended, or multiple-choice question comes with a note on when to use it, so your surveys stay targeted and produce actionable insights.

- "Overall, how satisfied are you with your experience today?" (Likert scale question from "Very satisfied" to "Very dissatisfied")

When to use this question: After a key interaction (purchase, support resolution, onboarding milestone). This is your core CSAT question to measure satisfaction.

- "Please rate your overall satisfaction with [product/service]." (Likert scale question from "Very satisfied" to "Very dissatisfied")

When to use this question: Product usage moments or after a project delivery for services. Useful for tracking overall satisfaction by customer segments.

- "How well did we meet your expectations today?" (Likert scale question from "Exceeded expectations" to "Fell short of expectations")

When to use this question: After launches, deliveries, or customer support outcomes where expectations matter more than speed.

- "How would you rate the quality of the product or service you received?" (Likert scale question from "Excellent" to "Poor")

When to use this question: Post-purchase, post-implementation, or after a completed service engagement to measure product quality and service quality.

- "How satisfied are you with the speed of our response?" (Likert scale question from "Very satisfied" to "Very dissatisfied")

When to use this question: After a recent customer service experience, especially for customer support channels with SLAs.

- "How satisfied are you with the clarity of our communication?" (Likert scale question from "Very satisfied" to "Very dissatisfied")

When to use this question: Support, onboarding, and complex processes (billing, security reviews, implementation).

- "Did we resolve your issue today?" (Yes/No)

When to use this question: Transactional support surveys. Use survey logic to show an open-ended follow-up when the answer is "No".

- "How satisfied are you with your customer service representative's support?" (Likert scale question from "Very satisfied" to "Very dissatisfied")

When to use this question: After calls, chats, or casework where a specific person's help shaped the experience.

- "How much effort did it take to get your issue resolved?" (Likert scale question from "Very low effort" to "Very high effort")

When to use this question: CES-style measurement for customer service team performance and self-serve workflows.

- "How easy was it to find what you needed?" (Likert scale question from "Very easy" to "Very difficult")

When to use this question: In your knowledge base, help center, in-app navigation, or website journeys where customer behavior shows a drop-off.

- "Which part of the process felt most frustrating?" (Multiple choice + 'Other')

When to use this question: When you need to pinpoint pain points quickly. Great for onboarding process reviews and complex workflows.

- "What was the primary reason for your rating?" (Multiple choice + 'Other')

When to use this question: Any CSAT survey where you want structured, actionable data at scale.

- "What's one thing we could do to improve your experience?" (Open field)

When to use this question: Always. Keep it optional, and you'll still gather detailed feedback from motivated customers.

- "How likely are you to continue using us over the next 3 months?" (Likert scale question from "Very likely" to "Very unlikely")

When to use this question: Early warning signal for customer retention risk, especially with existing customers. Make sure you follow it with a question asking "Why?"

- "How likely are you to recommend us to a colleague or friend?" (0–10 scale from "Not at all likely" to "Extremely likely")

When to use this question: Relationship surveys and loyalty tracking (Net Promoter Score).

- "Which features matter most to you right now?" (Multiple choice + 'Other')

When to use this question: Evaluating customer preferences during roadmap planning, pricing changes, or segmentation work.

- "If you could change one thing about your experience, what would it be?" (Open field)

When to use this question: When you want authentic feedback that points to the next best improvement.

If you're using these as customer feedback survey questions in a longer form, use logic flow and branching to show only relevant questions based on earlier answers. It keeps the experience clean and helps you collect feedback without burning people out.

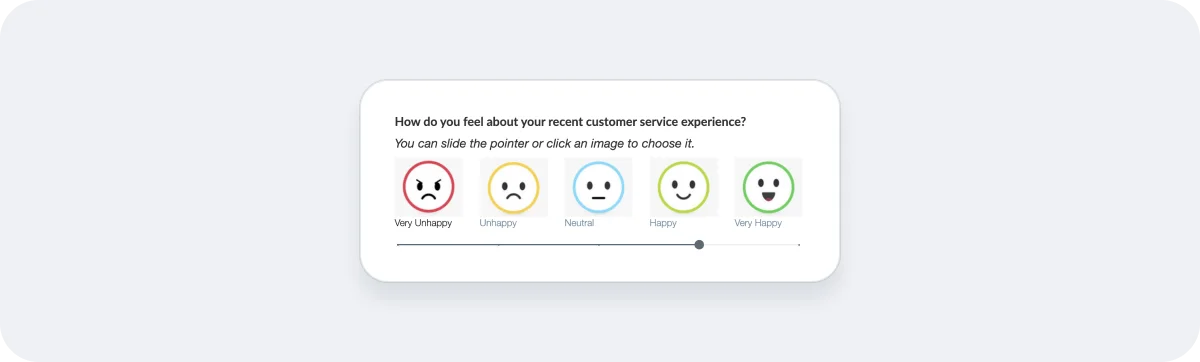

On Checkbox, you can also use slider questions and images to make it even simpler for respondents to answer satisfaction questions.

What is a good customer satisfaction score?

A "good" customer satisfaction score depends on your context. Industry benchmarks can help, but your most meaningful benchmark is your own trend line, measured consistently.

Here's a practical way to define what "good" looks like for your company:

- Decide what your CSAT score actually represents

A CSAT score is only comparable if you keep these consistent:

- The question wording

- The scale (1–5, 1–7, 0–10)

- The timing (immediately after support vs. monthly relationship survey)

- The definition of "satisfied" (top box only vs. top two boxes)

Even small wording changes can shift survey responses.

- Establish a baseline for each journey stage

Your support CSAT, onboarding CSAT, and product CSAT may be very different. That's normal.

Start by measuring each consistently for 4–8 weeks, then set baselines by:

- Customer segments (new customers vs. existing customers)

- Channel (chat vs. phone vs. email)

- Region or business unit

- Product line or plan tier

That baseline becomes your reference point for judging whether improvement initiatives are working.
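As a sketch of per-segment baselines, the Python below groups responses by any segment field and reports top-two-box CSAT alongside the response count. The field names and sample responses are made up for illustration.

```python
from collections import defaultdict


def csat_by_segment(responses: list[dict], segment_key: str,
                    threshold: int = 4) -> dict[str, tuple[float, int]]:
    """Group responses by a segment field and return {segment: (CSAT %, response count)}.

    Assumes each response has an integer 'rating' on a 1-5 scale; ratings at or above
    `threshold` count as satisfied (top-two-box by default).
    """
    totals: dict[str, int] = defaultdict(int)
    satisfied: dict[str, int] = defaultdict(int)
    for r in responses:
        seg = r[segment_key]
        totals[seg] += 1
        if r["rating"] >= threshold:
            satisfied[seg] += 1
    return {seg: (100 * satisfied[seg] / n, n) for seg, n in totals.items()}


responses = [
    {"channel": "chat", "customer": "new", "rating": 5},
    {"channel": "chat", "customer": "existing", "rating": 4},
    {"channel": "email", "customer": "new", "rating": 2},
    {"channel": "email", "customer": "existing", "rating": 4},
    {"channel": "phone", "customer": "existing", "rating": 3},
]
print(csat_by_segment(responses, "channel"))
# {'chat': (100.0, 2), 'email': (50.0, 2), 'phone': (0.0, 1)}
```

Keeping the count next to the percentage matters: a segment with a single response (phone, here) can swing from 0% to 100% on the next reply.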

- Look beyond the average

Two teams can have the same CSAT score with very different realities.

Add these checks:

- Distribution – are you getting lots of neutrals, or polarized feedback?

- Volume – is the sample size stable enough week to week?

- Comments – what themes show up in qualitative data?

- Drivers – what factors correlate with low satisfaction levels?

Used together, these checks show what's actually happening behind the headline score.
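To see why the distribution check matters, here's a Python sketch with made-up ratings for two hypothetical teams. Both land at 60% CSAT, but one has a neutral middle and the other is polarized. The "neutral" (3) and "unhappy" (1–2) buckets are assumed definitions for a 5-point scale.

```python
from collections import Counter


def rating_profile(ratings: list[int]) -> dict[str, float]:
    """Summarize 1-5 ratings beyond the headline CSAT (all values are percentages)."""
    n = len(ratings)
    if n == 0:
        raise ValueError("no responses")
    counts = Counter(ratings)  # missing ratings count as 0
    return {
        "csat": 100 * (counts[4] + counts[5]) / n,
        "neutral": 100 * counts[3] / n,
        "unhappy": 100 * (counts[1] + counts[2]) / n,
    }


team_a = [4, 4, 4, 3, 3, 3, 4, 4, 3, 4]  # mostly 4s with neutral 3s
team_b = [5, 5, 5, 5, 1, 1, 5, 5, 1, 1]  # polarized: love it or hate it
print(rating_profile(team_a))  # {'csat': 60.0, 'neutral': 40.0, 'unhappy': 0.0}
print(rating_profile(team_b))  # {'csat': 60.0, 'neutral': 0.0, 'unhappy': 40.0}
```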

- Use external benchmarks carefully

Benchmarking can be useful for context, especially if you're competing in a mature industry.

If you need a broader comparison point, programs like the American Customer Satisfaction Index (ACSI) exist to measure customer satisfaction across industries, but your internal consistency still matters most for decision-making.

How to use customer satisfaction surveys to improve your score

Measuring is the easy part. Improving takes a repeatable system.

Here's a simple, high-impact way to turn customer surveys into better outcomes.

Build a tight feedback loop

A feedback loop is the process of collecting feedback, acting on it, and validating whether the change worked.

A practical loop looks like this:

- Collect – targeted surveys at key moments in the customer journey

- Analyze – themes, behavioral trends, and segment differences

- Prioritize – choose improvements that are feasible and high-impact

- Act – fix the issue, update the process, and coach the team

- Re-measure – run the same question again to see if CSAT improves

When you repeat this loop monthly or quarterly, CSAT becomes a management tool, not a vanity metric.

Route feedback to the people who can fix it

Low satisfaction isn't always a customer service problem.

Route by category:

- Product issues – bugs, missing features, usability pain points

- Support issues – response time, empathy, resolution quality

- Onboarding issues – unclear steps, too many handoffs, confusing setup

- Policy issues – pricing changes, billing friction, contract confusion

If you tag survey data correctly, you can turn open comments into actionable data quickly.
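Here's a minimal Python sketch of tag-based routing using the four categories above. The keyword lists are hypothetical, and plain substring matching is a deliberately simple stand-in for manual tagging or a text-analytics tool. It will miss synonyms and can mis-tag comments, so anything unmatched falls back to a human review queue.

```python
# Hypothetical keyword lists for the four categories above.
ROUTING_RULES = {
    "product": ("bug", "crash", "missing feature", "hard to use"),
    "support": ("response time", "waited", "no reply", "not resolved"),
    "onboarding": ("setup", "set up", "onboarding", "handoff"),
    "policy": ("pricing", "price", "billing", "invoice", "contract"),
}


def route_comment(comment: str) -> list[str]:
    """Return every category whose keywords appear in an open-text comment."""
    text = comment.lower()
    matches = [category for category, keywords in ROUTING_RULES.items()
               if any(keyword in text for keyword in keywords)]
    return matches or ["untagged"]  # unmatched comments go to a human for review


print(route_comment("Setup took forever and the invoice was wrong"))  # ['onboarding', 'policy']
```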

Treat follow-up as part of the experience

If someone is unhappy, follow-up is where you regain trust.

A simple playbook:

- Detractors or low CSAT – personal outreach within 24–72 hours

- Neutral responses – ask one clarifying question to understand what would shift them

- Promoters – ask what they value most and use it to reinforce what's working

This is also where you can reduce customer churn and build customer loyalty through consistent, human responses.
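The playbook can be encoded as a small rule, sketched here in Python for a 1–5 CSAT scale. The rating buckets and the 72-hour default (the upper end of the 24–72 hour range) are assumptions you'd adjust to your own service levels.

```python
from datetime import datetime, timedelta
from typing import NamedTuple, Optional


class FollowUp(NamedTuple):
    action: str
    due: Optional[datetime]  # None means no hard deadline


def plan_follow_up(rating: int, received_at: datetime,
                   outreach_window: timedelta = timedelta(hours=72)) -> FollowUp:
    """Map a 1-5 CSAT rating to a follow-up step.

    Assumed buckets: 1-2 is low, 3 is neutral, 4-5 is happy. Pass a shorter
    `outreach_window` (e.g. 24 hours) to tighten the personal-outreach deadline.
    """
    if not 1 <= rating <= 5:
        raise ValueError("rating must be between 1 and 5")
    if rating <= 2:
        return FollowUp("Personal outreach", received_at + outreach_window)
    if rating == 3:
        return FollowUp("Ask one clarifying question about what would improve the rating", None)
    return FollowUp("Ask what they value most and share it with the team", None)


print(plan_follow_up(1, datetime(2024, 6, 3, 9, 0)))
# FollowUp(action='Personal outreach', due=datetime.datetime(2024, 6, 6, 9, 0))
```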

Use insights to improve processes, not just messages

It's tempting to respond to bad scores with better scripts.

Sometimes that helps. Often the fix is structural:

- Reduce handoffs in support workflows

- Improve self-serve documentation

- Simplify onboarding steps

- Fix recurring product quality issues

- Clarify what "success" looks like for new customers early

When you use survey insights to change the system, satisfaction improves naturally.

Use enterprise-grade tooling when feedback becomes mission-critical

Once you're collecting customer feedback across multiple teams, channels, and regions, spreadsheets stop being manageable.

An enterprise feedback management strategy helps you:

- Centralize customer feedback surveys across the organization

- Automate distribution at key lifecycle events

- Segment and filter survey responses in useful ways

- Generate real-time reporting and insights

Checkbox's enterprise feedback management platform is built for exactly that, with flexible deployment options and a focus on secure, scalable survey programs.

Final thoughts

CSAT is one of the cleanest ways to understand how customers feel, but it only works if you respect the context.

Measure customer satisfaction at the moments that matter. Use the right survey method for the job. Keep questions simple, flow smart, and follow up like a real human. Then use what you learn to improve the product or service, the support experience, and the customer journey as a whole.

That's how customer satisfaction surveys stop being "just another form" and start becoming a reliable source of improvement.

Customer satisfaction survey FAQs

What are the best CSAT survey tools?

The "best" CSAT survey tools depend on where you collect feedback (support, product, email) and how complex your program is (one team vs. enterprise-wide). In practice, the best tools share a few must-haves:

- Logic/branching so customers only see relevant questions (better experience, cleaner survey data)

- Randomization options (useful in longer surveys to reduce order bias)

- Automation and triggers (send targeted surveys at key lifecycle moments)

- Reporting/analytics that turn feedback into actionable data (not just charts)

If you're choosing today, a simple way to decide is: start with where you'll run the CSAT survey (support vs. product vs. lifecycle), then pick the tool that makes it easiest to collect customer feedback, segment it, and close the feedback loop with your customer service team and product owners.

For companies that value data sovereignty in their enterprise feedback programs (centralized, secure, multi-team), Checkbox is a good solution. Checkbox is built for managing surveys at scale, with survey logic (conditions and branching) and enterprise-focused features like security, customization, and analytics/reporting for turning feedback into change.

What makes a good CSAT question?

Good CSAT questions do two jobs at once:

- They make it easy for customers to answer (fast, clear, mobile-friendly).

- They give you actionable insights you can actually use to improve customer satisfaction.

A strong, reliable core question is:

- "Overall, how satisfied are you with your experience today?"

Then add one follow-up that turns the score into customer feedback:

- "What's the main reason for your rating?" (multiple-choice question + "Other")

- "What's one thing we could do to improve?" (open field, to gather detailed feedback)

If you want more targeted customer satisfaction survey questions, tailor them to the moment in the customer journey:

- After a recent customer service experience: "How satisfied are you with the support you received?"

- After onboarding: "How satisfied are you with the onboarding process so far?"

- After a launch: "How satisfied are you with the new [feature]?"

- When effort matters: "How easy was it to resolve your issue?"

The key is to keep the survey focused: one primary satisfaction question, one driver question, and (optionally) one open text prompt.

What is a CSAT survey?

A CSAT survey (Customer Satisfaction Score survey) is a short survey used to measure customer satisfaction after a specific experience – like a support interaction, onboarding step, purchase, or feature launch. These surveys typically ask customers to rate their overall satisfaction on a simple scale (often 1–5), then use those survey responses to calculate a customer satisfaction score.

Most teams calculate CSAT as the percentage of satisfied customers (for example, people who answered 4–5 on a 5-point scale) out of all responses.
